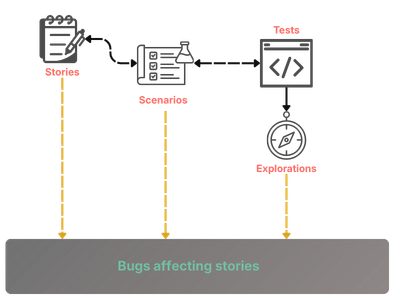

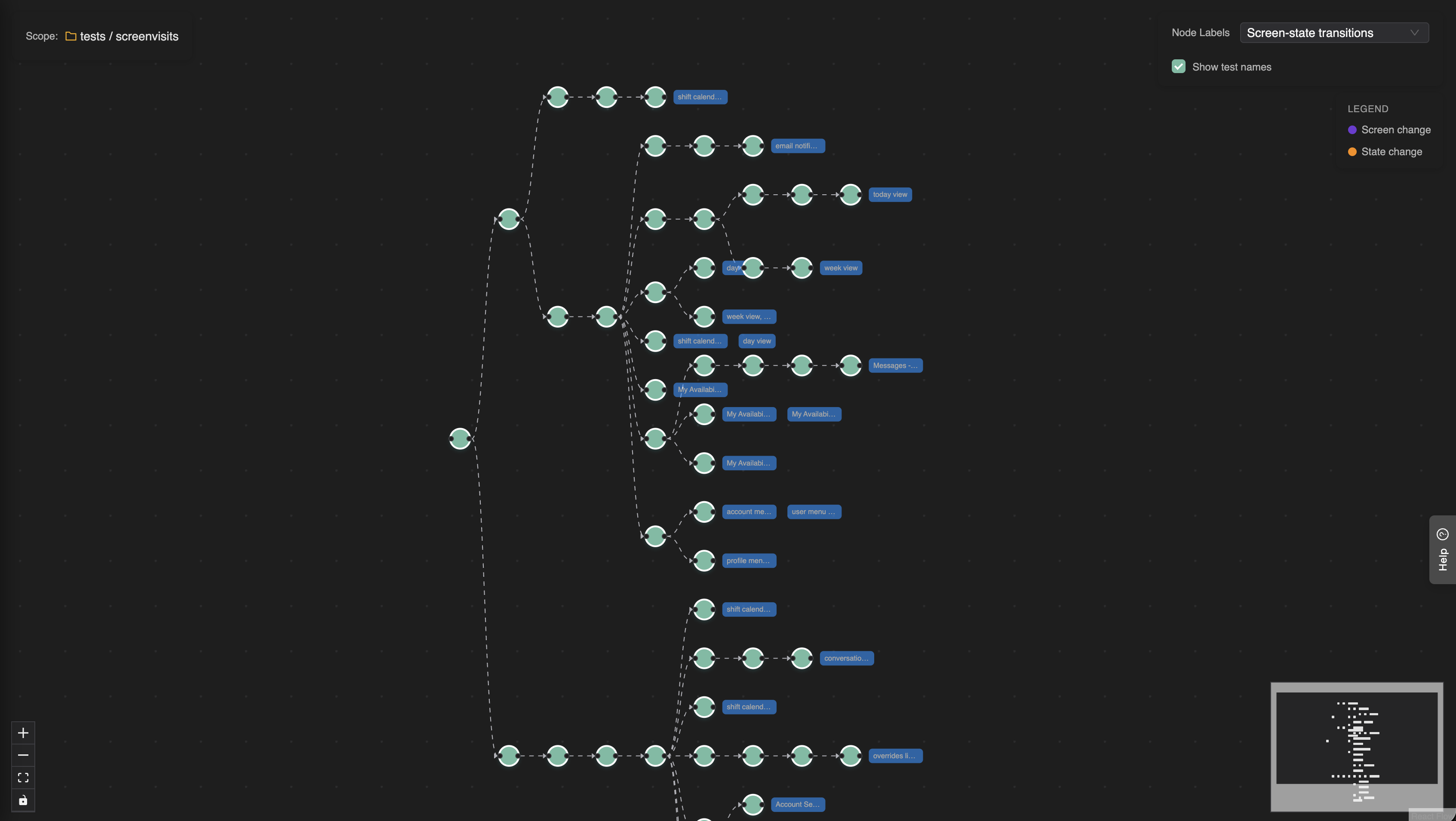

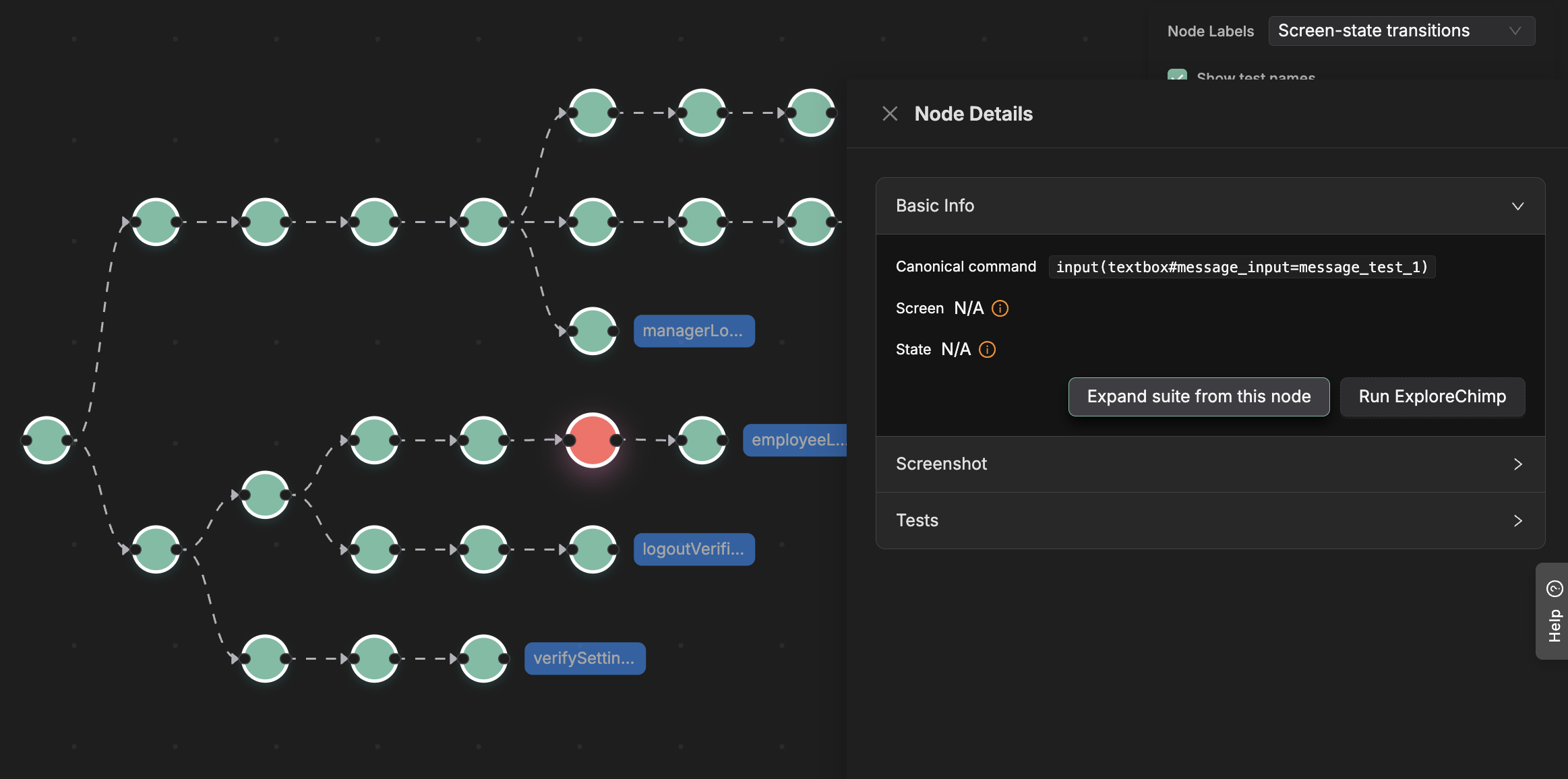

End-to-end tests are ultimately just a sequence of user actions and expectation checks. Conceptually, each test is a walk through your app:

Go to URL -> Log in -> Go to Settings page -> Update role -> Verify role is updated

You can represent this as a path: every step is a node, and the edges show how the user moves from one step to the next.

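Now imagine aggregating all the paths from all the tests in your suite. Many tests share the same opening steps (such as logging in), so their paths merge into a common trunk and branch wherever they diverge. You end up with a tree-like structure: essentially a map of every known pathway through your product.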
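Here is a minimal sketch of the aggregation step. It assumes each test has already been reduced to an ordered list of plain-text step labels. The `suite` data and names like `build_pathway_tree` are hypothetical and are not TestChimp's implementation. Merging the paths into a prefix tree (a trie) could look like this:

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A step in the pathway tree; children are keyed by the next step's label."""
    step: str
    children: dict = field(default_factory=dict)  # step label -> Node
    tests: list = field(default_factory=list)     # tests whose path passes through here


def build_pathway_tree(test_paths):
    """Merge every test's ordered step list into one prefix tree (a trie)."""
    root = Node("ROOT")
    for test_name, steps in test_paths.items():
        node = root
        for step in steps:
            # Reuse the child if another test already took this step from the
            # same position; otherwise this test opens a new branch.
            if step not in node.children:
                node.children[step] = Node(step)
            node = node.children[step]
            node.tests.append(test_name)
    return root


def print_tree(node, depth=0):
    """Indented dump: each line shows a step and the tests that cover it."""
    for child in node.children.values():
        print("  " * depth + f"{child.step}  {child.tests}")
        print_tree(child, depth + 1)


# Hypothetical suite: test name -> ordered user steps.
suite = {
    "update_role": ["Go to URL", "Log in", "Open Settings", "Update role", "Verify role updated"],
    "change_email": ["Go to URL", "Log in", "Open Settings", "Change email", "Verify email updated"],
    "add_to_cart": ["Go to URL", "Log in", "Search item", "Add to cart"],
}

tree = build_pathway_tree(suite)
print_tree(tree)
```

Each child is keyed by its label under a specific parent. As a result, the shared opening steps appear only once, and the tree branches only where tests diverge: here, after `Log in` and again after `Open Settings`.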

This isn’t just a “cool visualization.”

It unlocks powerful, practical applications, especially when using AI agents for testing.

Better RAG for Testing Agents

This tree acts as a graph index over your product’s behavioural pathways. Just as a database index accelerates queries, this structure lets an agent answer deeper questions about your app’s behaviour, making retrieval-augmented reasoning much more effective. With it, an agent doesn’t have to hallucinate how the app works: it can look up structure, pathways, and reachable states deterministically.
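To make the index analogy concrete, here is a hedged sketch of two deterministic lookups an agent could run instead of guessing. It uses the same hypothetical step-label representation as before and collapses the tree into a label-level graph. That graph is simpler to query, but it loses the context of how a step was reached. A real index might key nodes by screen and state instead; that choice is an assumption here.

```python
from collections import defaultdict, deque


def build_index(test_paths):
    """Return (edges, step_to_tests) built from ordered step lists."""
    edges = defaultdict(set)          # step -> steps observed directly after it
    step_to_tests = defaultdict(set)  # step -> tests that perform that step
    for test_name, steps in test_paths.items():
        for step in steps:
            step_to_tests[step].add(test_name)
        for src, dst in zip(steps, steps[1:]):
            edges[src].add(dst)
    return edges, step_to_tests


def reachable_from(edges, start):
    """Breadth-first search over known edges: every other step reachable from `start`."""
    seen, queue = {start}, deque([start])
    while queue:
        for nxt in edges.get(queue.popleft(), ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    seen.discard(start)
    return seen


# Hypothetical suite: test name -> ordered user steps.
suite = {
    "update_role": ["Go to URL", "Log in", "Open Settings", "Update role"],
    "change_email": ["Go to URL", "Log in", "Open Settings", "Change email"],
    "add_to_cart": ["Go to URL", "Log in", "Search item", "Add to cart"],
}

edges, step_to_tests = build_index(suite)
print(sorted(reachable_from(edges, "Open Settings")))
print(sorted(step_to_tests["Open Settings"]))
```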

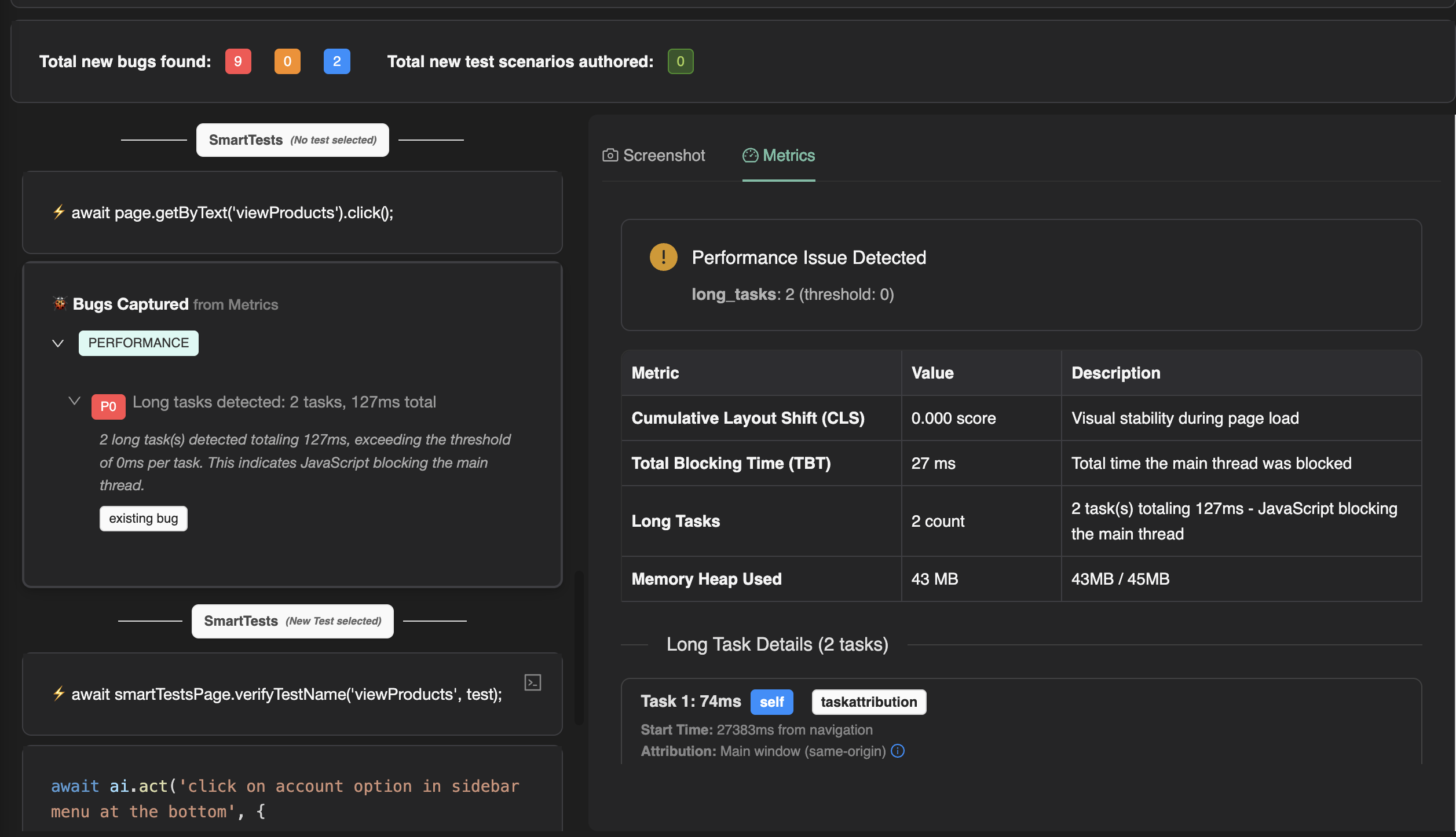

Automatically Expanding Your Test Suite

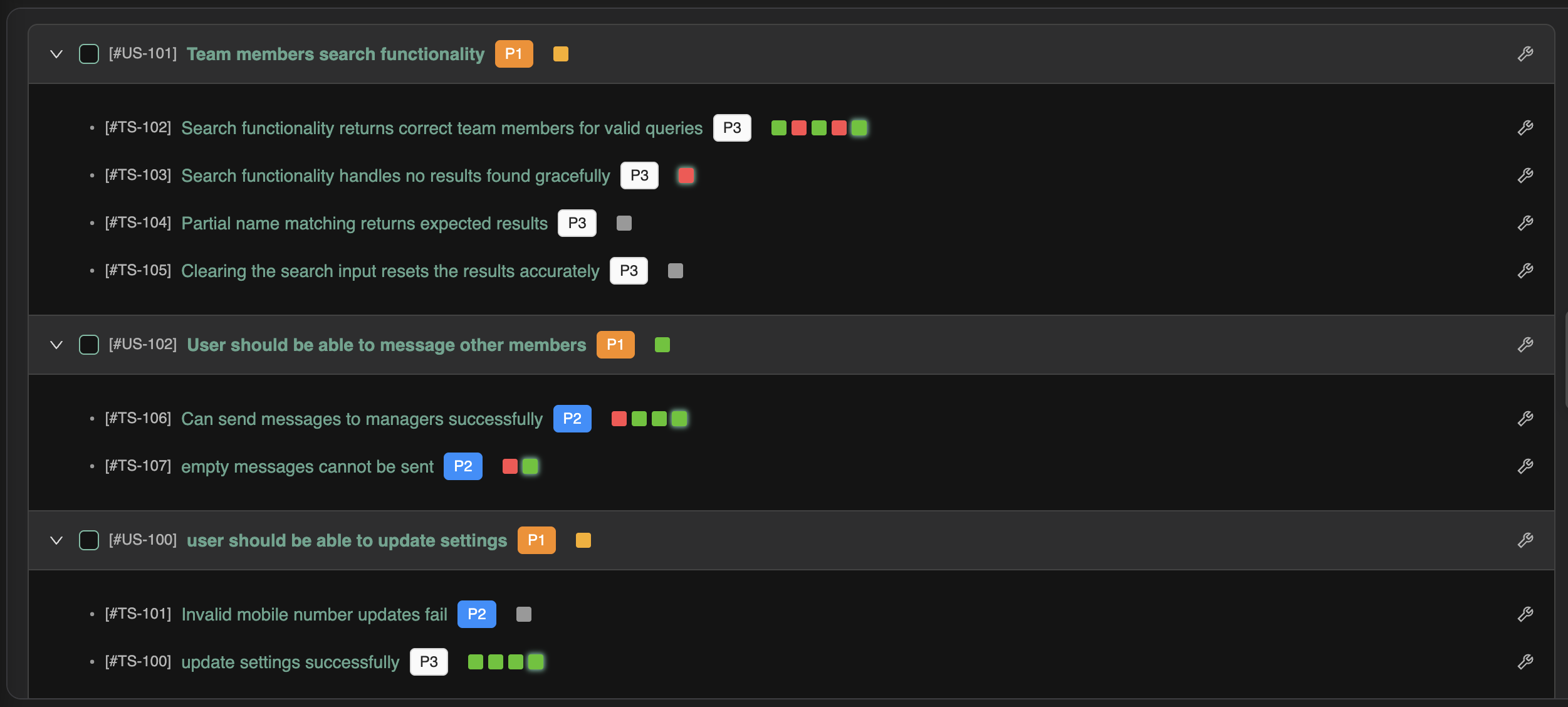

Once you have this _pathway map_, an agent can intelligently expand your test suite by targeting untested branches.

To do this well, the agent needs two answers:

- How do I reach the required state?

- Which branches from that state are already covered?

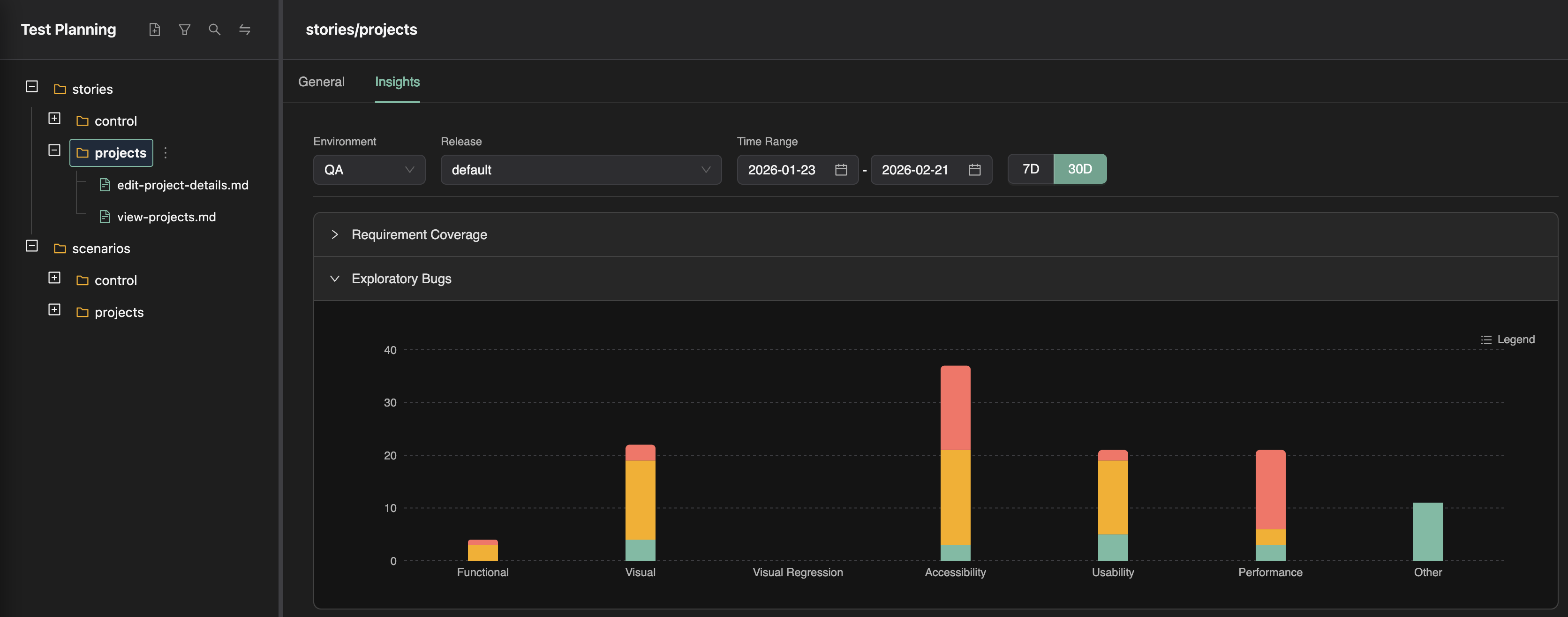

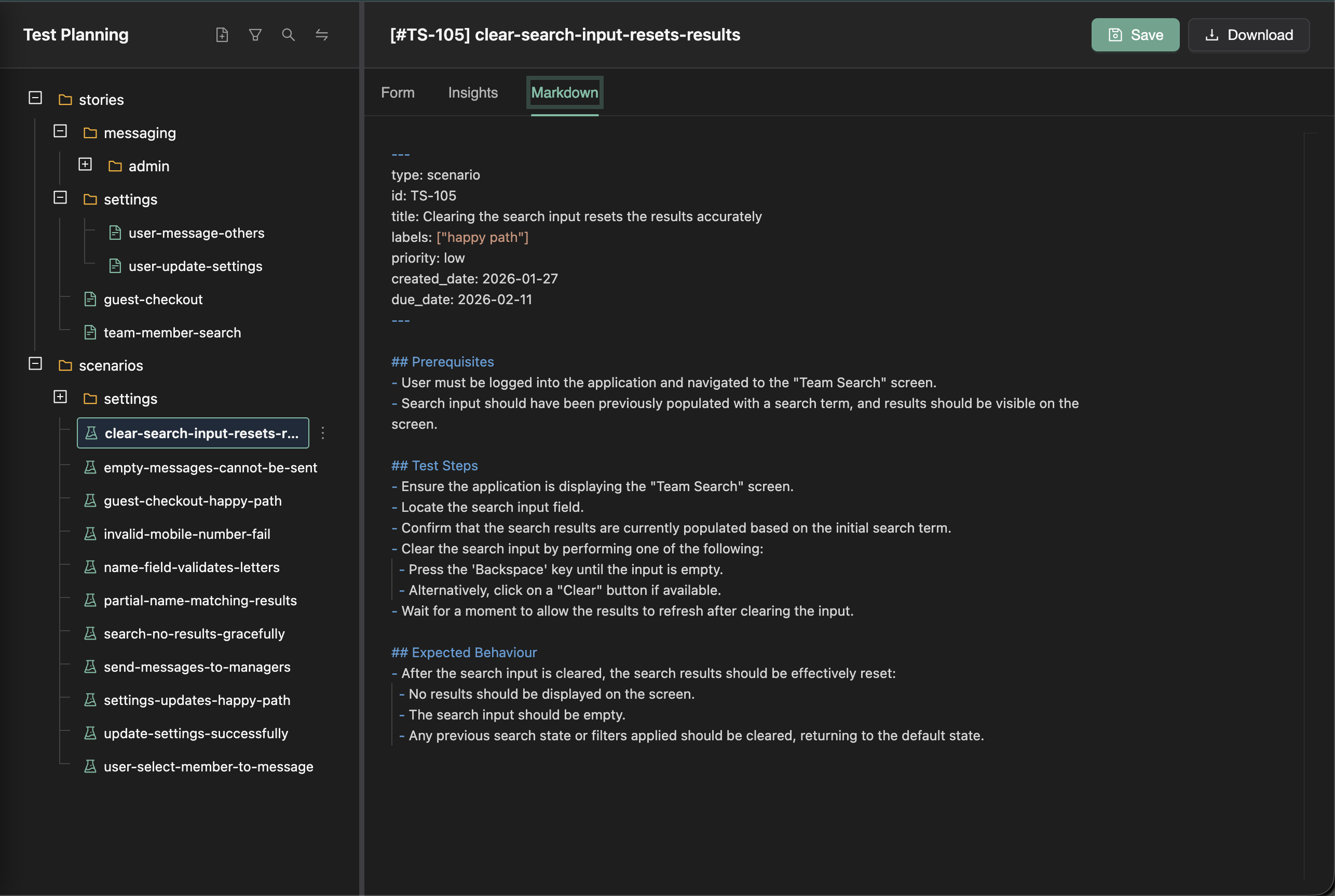

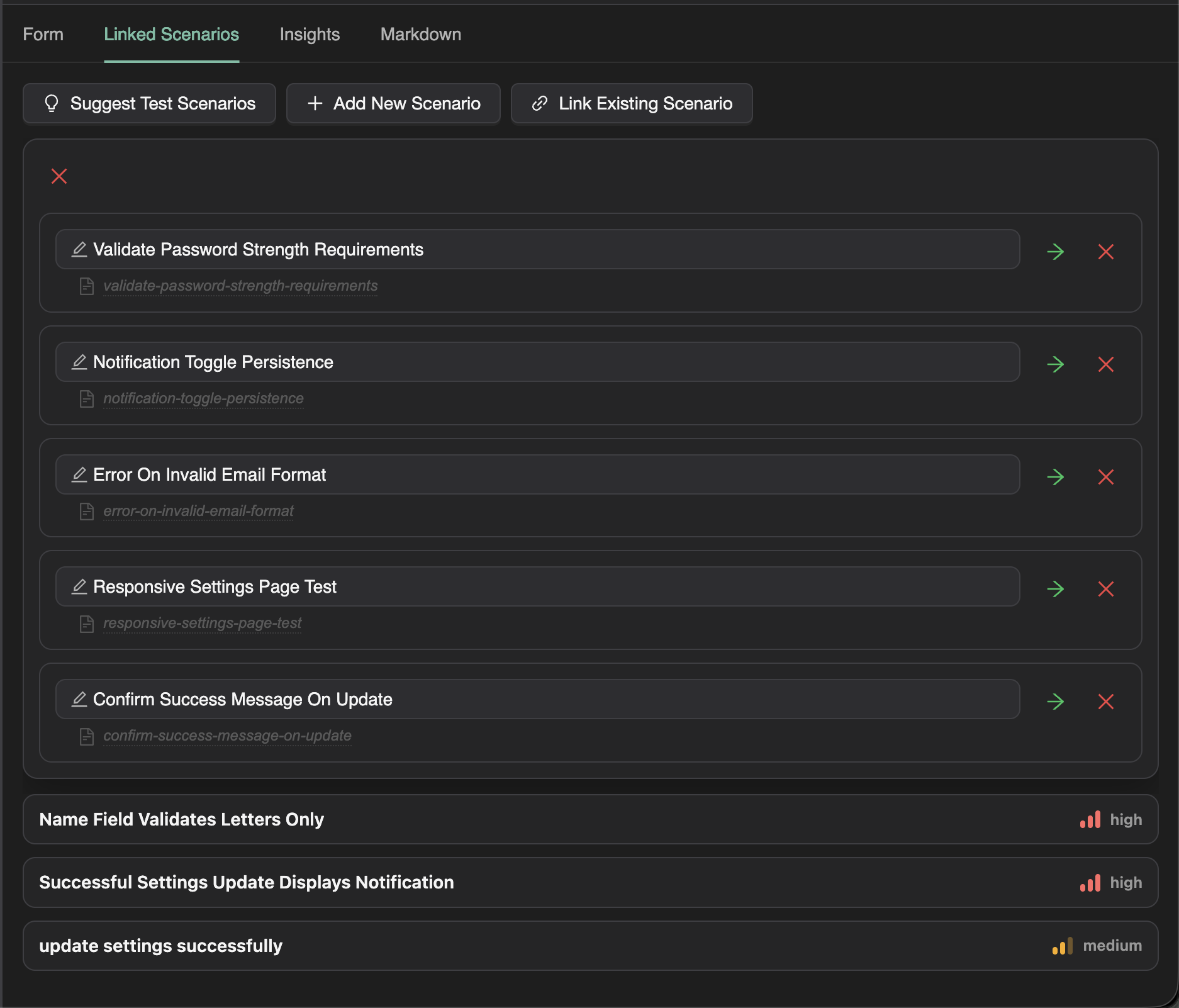

In TestChimp (under Atlas → Behaviour Tree), selecting any node shows:

- the exact path from the root to that node (how to get there), and

- all outgoing edges (which branches are already explored by existing tests).
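The two lookups a selected node provides can be sketched with parent pointers on the tree nodes. This is an illustrative model with hypothetical names (`insert_path`, `path_to`, `outgoing_edges`), not TestChimp's actual data structure:

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)
class Node:
    step: str
    # repr=False avoids infinite recursion through parent <-> children links.
    parent: Optional["Node"] = field(default=None, repr=False)
    children: dict = field(default_factory=dict)  # step label -> Node
    tests: set = field(default_factory=set)       # tests passing through this node


def insert_path(root, test_name, steps):
    """Add one test's steps under `root`, sharing any existing prefix."""
    node = root
    for step in steps:
        if step not in node.children:
            node.children[step] = Node(step, parent=node)
        node = node.children[step]
        node.tests.add(test_name)


def path_to(node):
    """How to get there: follow parent pointers back to the root, then reverse."""
    steps = []
    while node.parent is not None:
        steps.append(node.step)
        node = node.parent
    return steps[::-1]


def outgoing_edges(node):
    """What is already explored: each branch from `node` and the tests covering it."""
    return {step: sorted(child.tests) for step, child in node.children.items()}


root = Node("ROOT")
insert_path(root, "update_role", ["Go to URL", "Log in", "Open Settings", "Update role"])
insert_path(root, "change_email", ["Go to URL", "Log in", "Open Settings", "Change email"])

settings = root.children["Go to URL"].children["Log in"].children["Open Settings"]
print(path_to(settings))
print(outgoing_edges(settings))
```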

From there, the agent simply:

- Navigates to the node by following the script steps.

- Looks at the UI state.

- Brainstorms unexplored actions (new branches).

- Converts each unexplored branch into a new test.
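The loop above can be sketched as a single function. Every callable passed in (`navigate`, `observe_ui`, `brainstorm`, `write_test`) is a hypothetical stand-in for a browser driver or model call. The `covered` set is assumed to come from the node's existing outgoing edges.

```python
from typing import Callable, Iterable


def expand_from_node(
    path: list[str],
    covered: set[str],
    navigate: Callable[[list[str]], None],
    observe_ui: Callable[[], str],
    brainstorm: Callable[[str], Iterable[str]],
    write_test: Callable[[list[str]], str],
) -> list[str]:
    """One round of branch expansion at a selected node (all callables are hypothetical)."""
    navigate(path)                       # 1. replay the script steps to reach the node
    ui_state = observe_ui()              # 2. capture the current UI state
    new_tests = []
    for action in brainstorm(ui_state):  # 3. propose candidate next actions
        if action in covered:            # skip branches existing tests already explore
            continue
        new_tests.append(write_test(path + [action]))  # 4. turn the branch into a test
    return new_tests


# Usage with stub callables standing in for a real browser and model.
visited = []
tests = expand_from_node(
    path=["Go to URL", "Log in", "Open Settings"],
    covered={"Update role", "Change email"},
    navigate=visited.extend,
    observe_ui=lambda: "Settings page: role, email, password, delete account",
    brainstorm=lambda ui: ["Update role", "Change password", "Delete account"],
    write_test=lambda steps: " -> ".join(steps),
)
print(tests)
```

Skipping actions already in `covered` keeps the agent focused on genuinely new branches rather than regenerating existing tests.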

In other words, the map gives the agent the same advantage a human has when using Google Maps: it can get anywhere, deliberately.

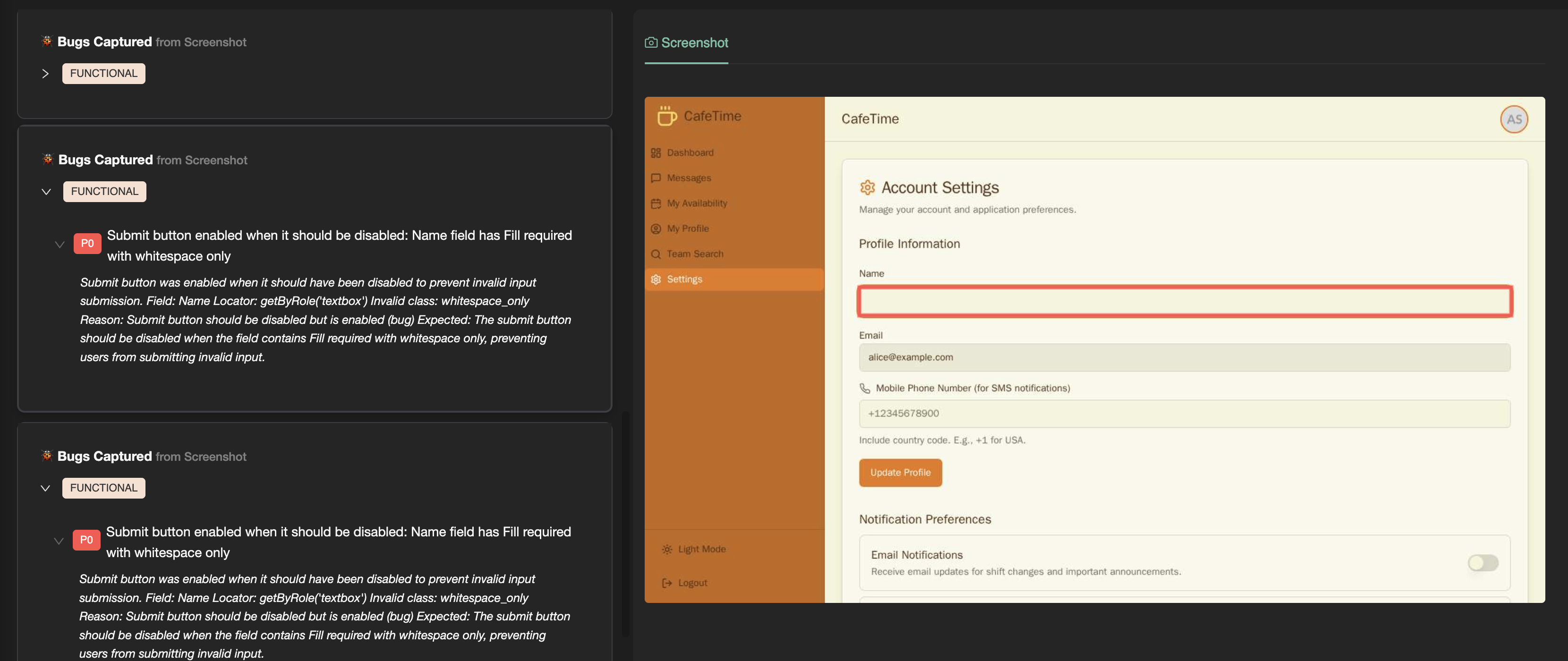

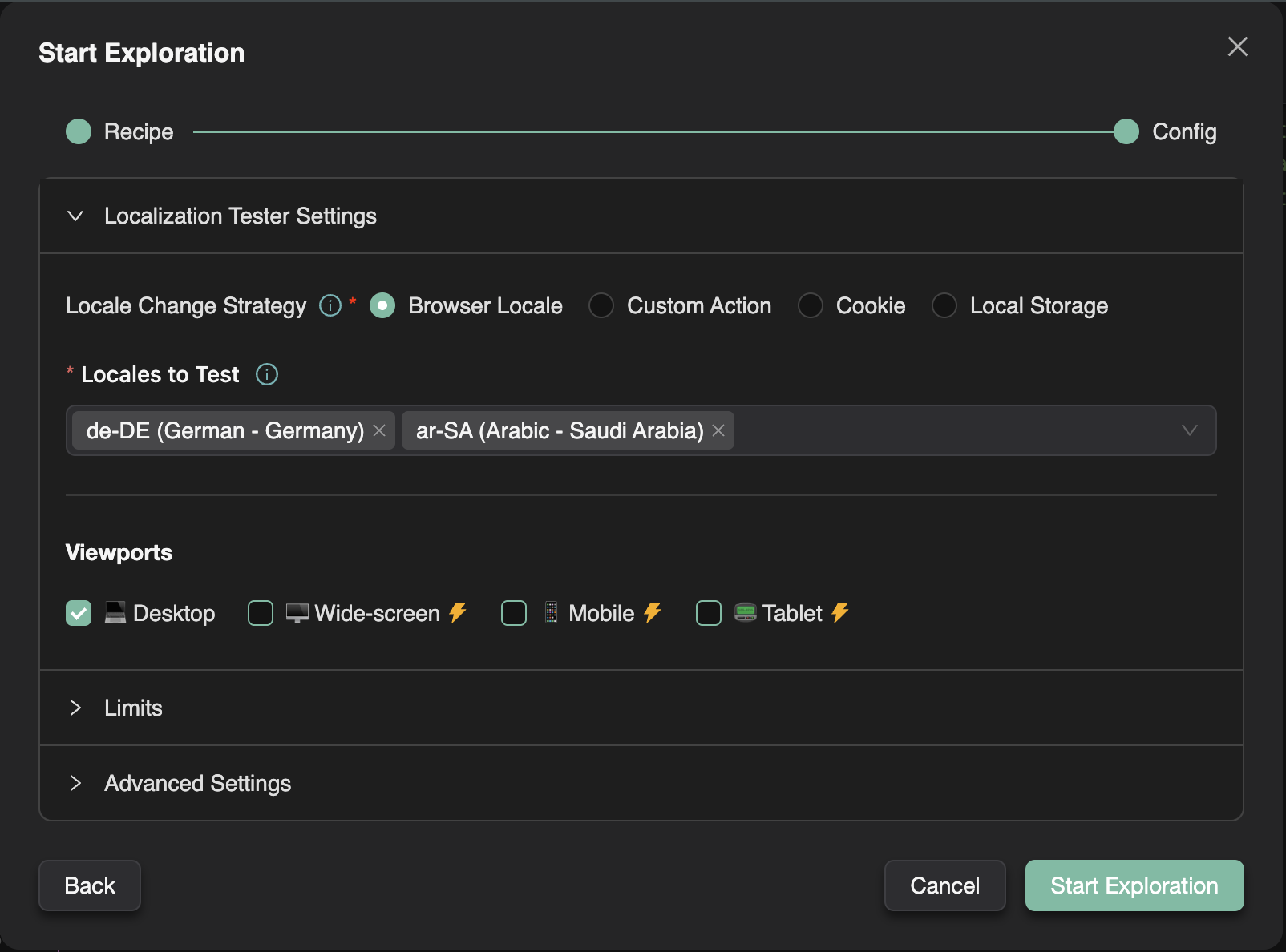

Controlled Agentic Exploration

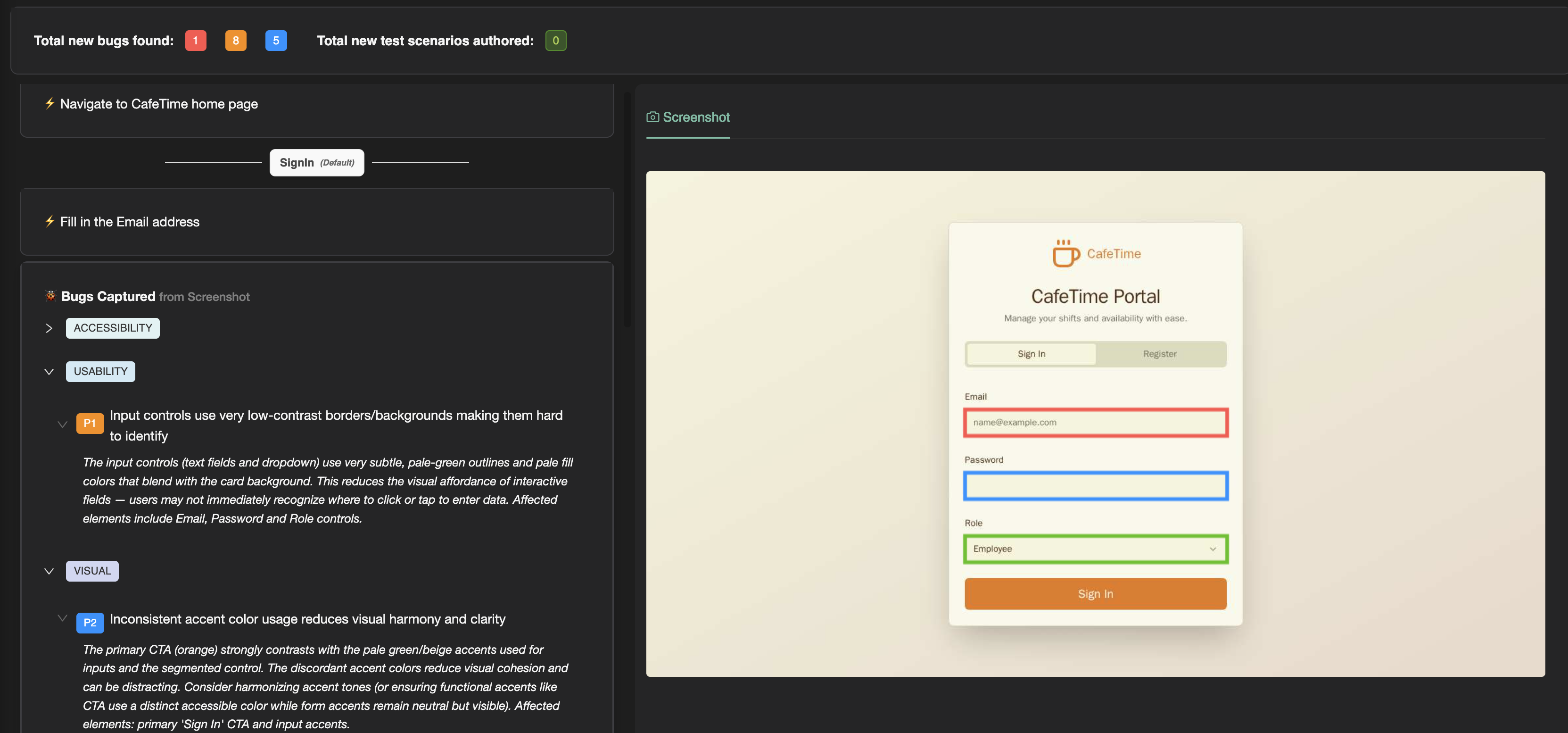

Agent-led exploratory testing can be powerful: the agent can analyze the DOM, screenshots, network logs, and console output while walking through your app.

But in practice, fully agentic exploration has challenges:

- Slow: inference happens at every step

- Easily distracted: coarse objectives lead to wandering

- Unfocused: without context, exploration becomes random

It’s like asking a human to explore an unfamiliar city with no map: slow progress, random detours, and little sense of the big picture.

Your behavioural pathway graph is the map.

With it, the agent can:

- reason about where it is,

- figure out where to go next,

- and explore far more methodically.

You can even focus exploration narrowly, for example:

“Analyze the Settings page as an admin user.”

Because each step in the graph is annotated with the screen and state (from previous explorations), the agent can determine:

- how to reach that precise screen state, and

- how to explore meaningfully once there.

To try variations (for example, different scenarios in Settings), the agent simply follows the shared trunk of paths that lead to that screen, much like several routes through a city share the same highway.
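Here is a minimal sketch of both ideas. It assumes each test step carries the screen and state annotations described above; the `Step` record and state labels such as `"admin"` are hypothetical. `route_to` finds the prefix of a test that first lands on a given screen in a given state. `shared_trunk` finds the common prefix of the tests that pass through that screen state, which is where variations branch off.

```python
from typing import NamedTuple


class Step(NamedTuple):
    action: str
    screen: str        # screen the UI shows after this step (hypothetical annotation)
    state: frozenset   # state labels after this step, e.g. {"logged_in", "admin"}


def route_to(path, screen, required_state):
    """Prefix of `path` up to the first step on `screen` whose state includes `required_state`."""
    for i, step in enumerate(path):
        if step.screen == screen and set(required_state) <= step.state:
            return path[: i + 1]
    return None


def shared_trunk(paths):
    """Longest common prefix of actions across several paths."""
    trunk = []
    for steps in zip(*paths):
        if any(s.action != steps[0].action for s in steps):
            break
        trunk.append(steps[0].action)
    return trunk


ADMIN = frozenset({"logged_in", "admin"})
VIEWER = frozenset({"logged_in"})
tests = {
    "update_role": [Step("Go to URL", "Login", frozenset()), Step("Log in as admin", "Home", ADMIN),
                    Step("Open Settings", "Settings", ADMIN), Step("Update role", "Settings", ADMIN)],
    "invite_user": [Step("Go to URL", "Login", frozenset()), Step("Log in as admin", "Home", ADMIN),
                    Step("Open Settings", "Settings", ADMIN), Step("Invite user", "Settings", ADMIN)],
    "viewer_settings": [Step("Go to URL", "Login", frozenset()), Step("Log in as viewer", "Home", VIEWER),
                        Step("Open Settings", "Settings", VIEWER)],
}

# Tests that reach Settings as an admin, and the trunk they share before branching.
admin_settings = [p for p in tests.values() if route_to(p, "Settings", {"admin"}) is not None]
print([s.action for s in route_to(tests["update_role"], "Settings", {"admin"})])
print(shared_trunk(admin_settings))
```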

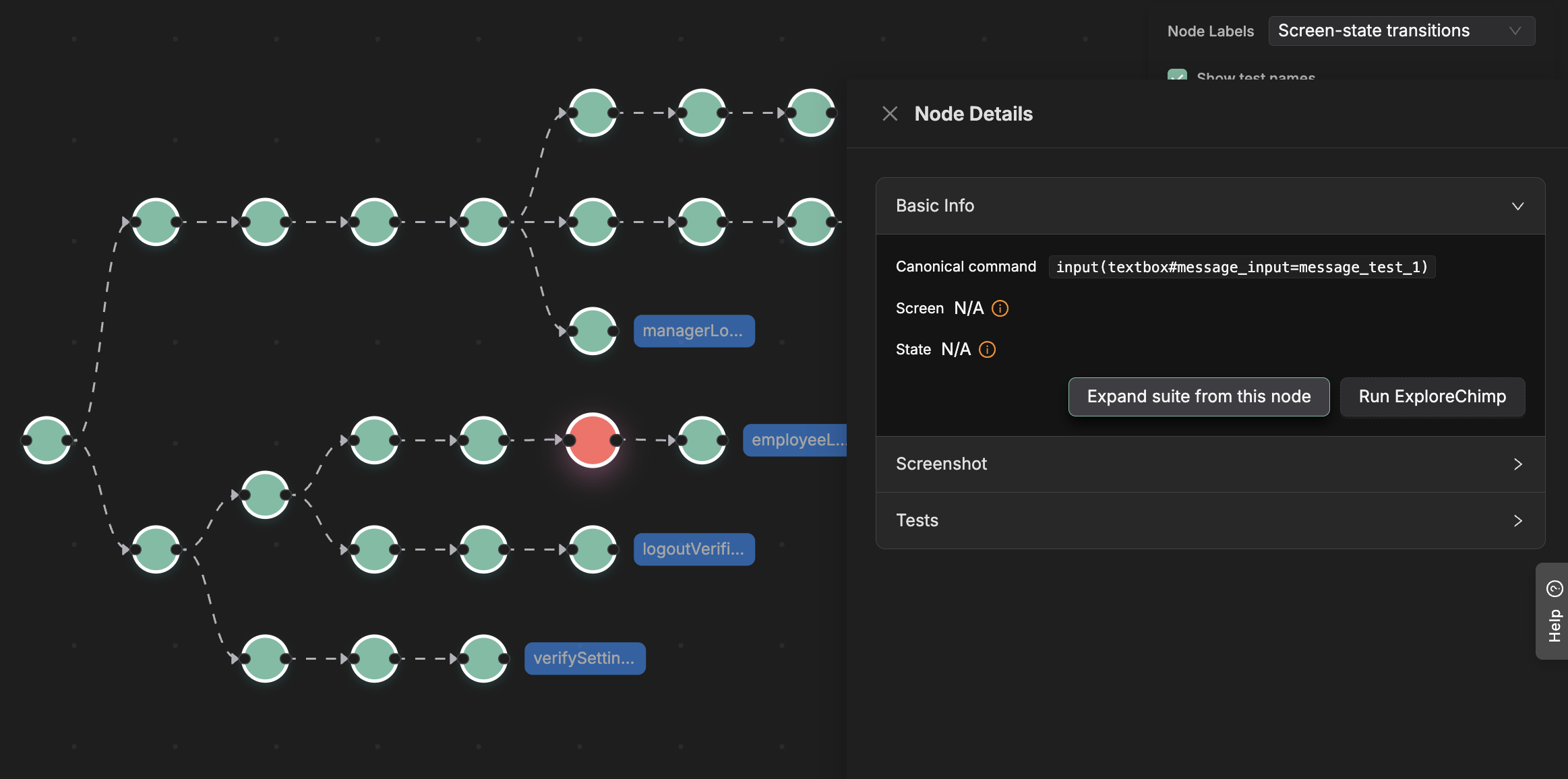

Bridging Pathways With App Structure: Screens & States

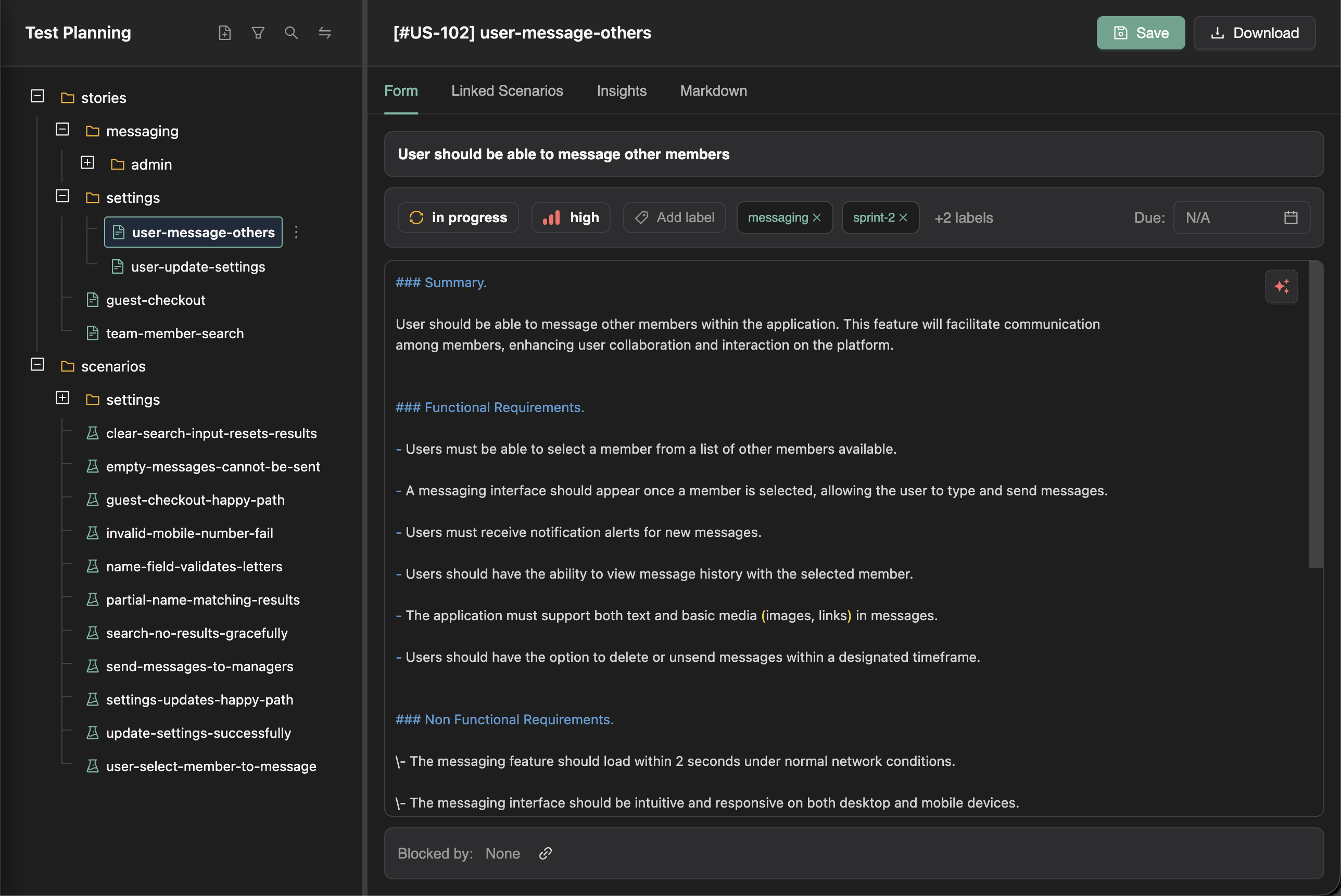

Throughout this post we’ve mentioned “screens” and “states.”

Here’s how they fit in.

While navigating, a human knows:

- “I’m on the login page”

- “Now I’m on the home page”

- “Now I’m on the settings page as an admin”

Traditional Playwright scripts do not carry that semantic information.

But an agent can.

As it walks through a test step-by-step, it can look at the UI and infer:

- Which screen am I on?

- What state am I in? (logged in, admin, item added, etc.)

This is exactly what ExploreChimp does.

During guided exploration, it maps each step to the screen and state the UI is currently in.

That enriched context enables the agent to answer questions like:

“How do I get to the Settings page as an admin user?”

“What screens does this test touch?”

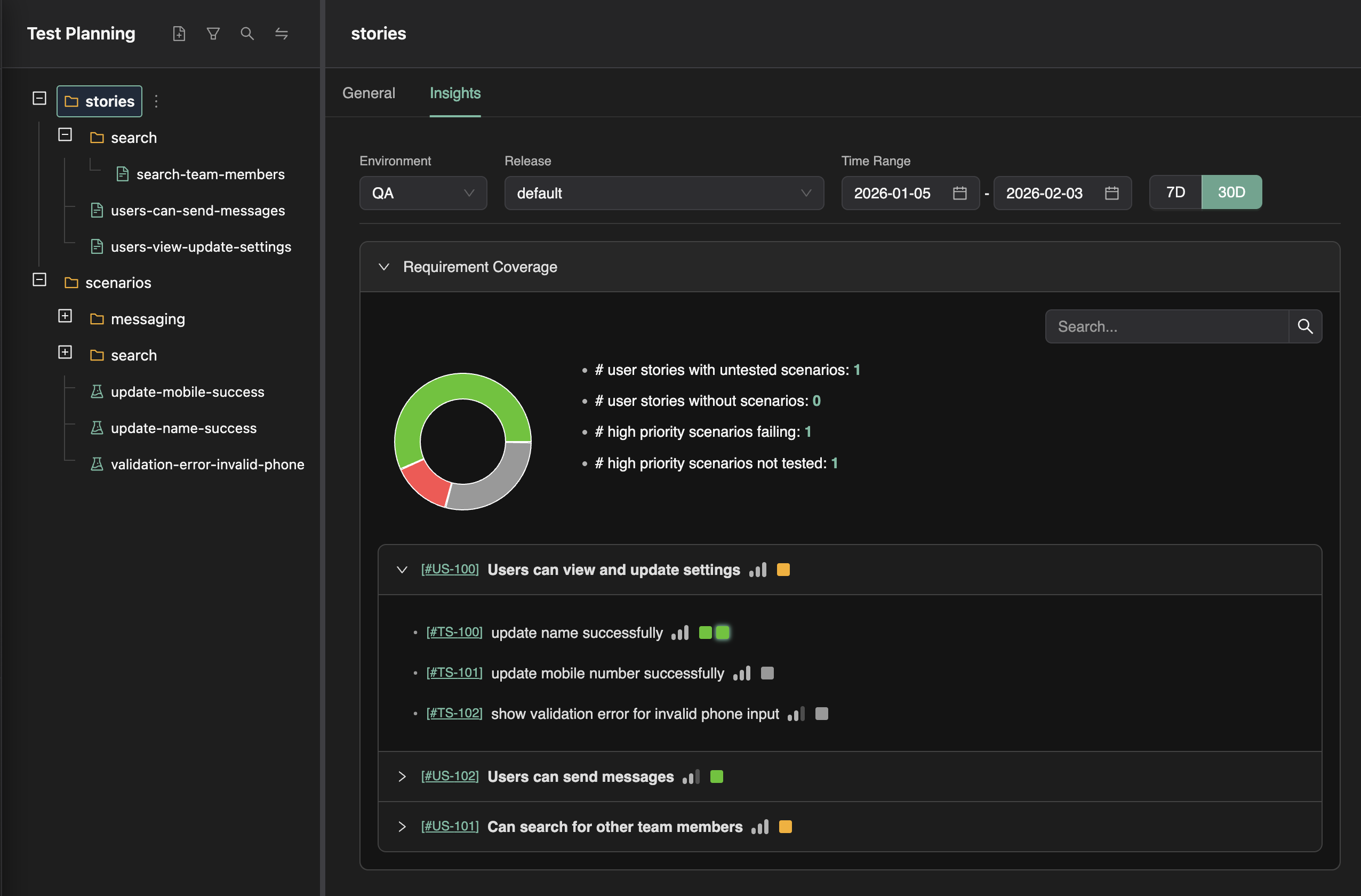

“Which parts of the product lack coverage?”
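Once each step is annotated with a screen, the last two questions reduce to simple set operations. The sketch below assumes `(action, screen)` pairs and a `known` screen inventory purely for illustration. In practice that inventory might come from screens discovered during exploration. Screen-level coverage is also coarse, and a fuller version would likely compare (screen, state) pairs.

```python
def screens_touched(annotated_test):
    """Distinct screens a test visits, in first-visit order."""
    return list(dict.fromkeys(screen for _action, screen in annotated_test))


def uncovered_screens(annotated_suite, known_screens):
    """Screens in a known-screen inventory that no test step lands on."""
    visited = {screen for steps in annotated_suite.values() for _action, screen in steps}
    return sorted(set(known_screens) - visited)


# Hypothetical annotated suite: each step is (action, screen the UI shows afterwards).
suite = {
    "update_role": [("Go to URL", "Login"), ("Log in", "Home"),
                    ("Open Settings", "Settings"), ("Update role", "Settings")],
    "add_to_cart": [("Go to URL", "Login"), ("Log in", "Home"),
                    ("Search item", "Search"), ("Add to cart", "Cart")],
}
# Hypothetical inventory, e.g. screens discovered during exploration.
known = {"Login", "Home", "Settings", "Search", "Cart", "Checkout", "Billing"}

print(screens_touched(suite["update_role"]))
print(uncovered_screens(suite, known))
```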

By connecting behavioural paths with semantic screen/state understanding, TestChimp gains a rich structural model of your app, fueling downstream capabilities like:

- generating user stories,

- planning test strategies,

- writing new tests,

- and performing targeted exploratory analysis.